Features (human experience)

Visual modality: color, shape, size, motion, texture (raw); patterns, symmetry, depth cues (derived)

Auditory modality: pitch, volume, tempo, rhythm (raw); speech patterns, tone of voice (derived)

Haptics? Emotions? Language?

Features (machine learning)

Numerical: Continuous or discrete values (e.g., height, number of words).

Categorical: Representing distinct groups (e.g., color, category labels).

Derived: Transformed or engineered values combining raw data (e.g., ratios, log values).
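
The three feature types above can be sketched in a few lines. This is only an illustration: the record fields (`height_cm`, `weight_kg`, `word_count`, `color`) and the category vocabulary are assumptions made up for the example, not data from the slides.

```python
import math

# Hypothetical raw records; field names and values are illustrative only.
records = [
    {"height_cm": 170.0, "weight_kg": 65.0, "word_count": 120, "color": "red"},
    {"height_cm": 182.0, "weight_kg": 90.0, "word_count": 15, "color": "blue"},
]

COLORS = ["red", "green", "blue"]  # assumed fixed category vocabulary


def one_hot(value, vocabulary):
    """Encode a categorical value as a 0/1 vector over the vocabulary."""
    return [1 if value == v else 0 for v in vocabulary]


def to_feature_vector(record):
    # Numerical features: one continuous value, one discrete count.
    numerical = [record["height_cm"], float(record["word_count"])]
    # Categorical feature: one-hot encoded group membership.
    categorical = one_hot(record["color"], COLORS)
    # Derived features: a ratio (body-mass index) and a log-transformed count.
    height_m = record["height_cm"] / 100.0
    bmi = record["weight_kg"] / (height_m ** 2)
    log_words = math.log1p(record["word_count"])
    return numerical + categorical + [bmi, log_words]


vectors = [to_feature_vector(r) for r in records]
```

Each record becomes a flat list of seven numbers, which is the form most learning algorithms expect as input.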

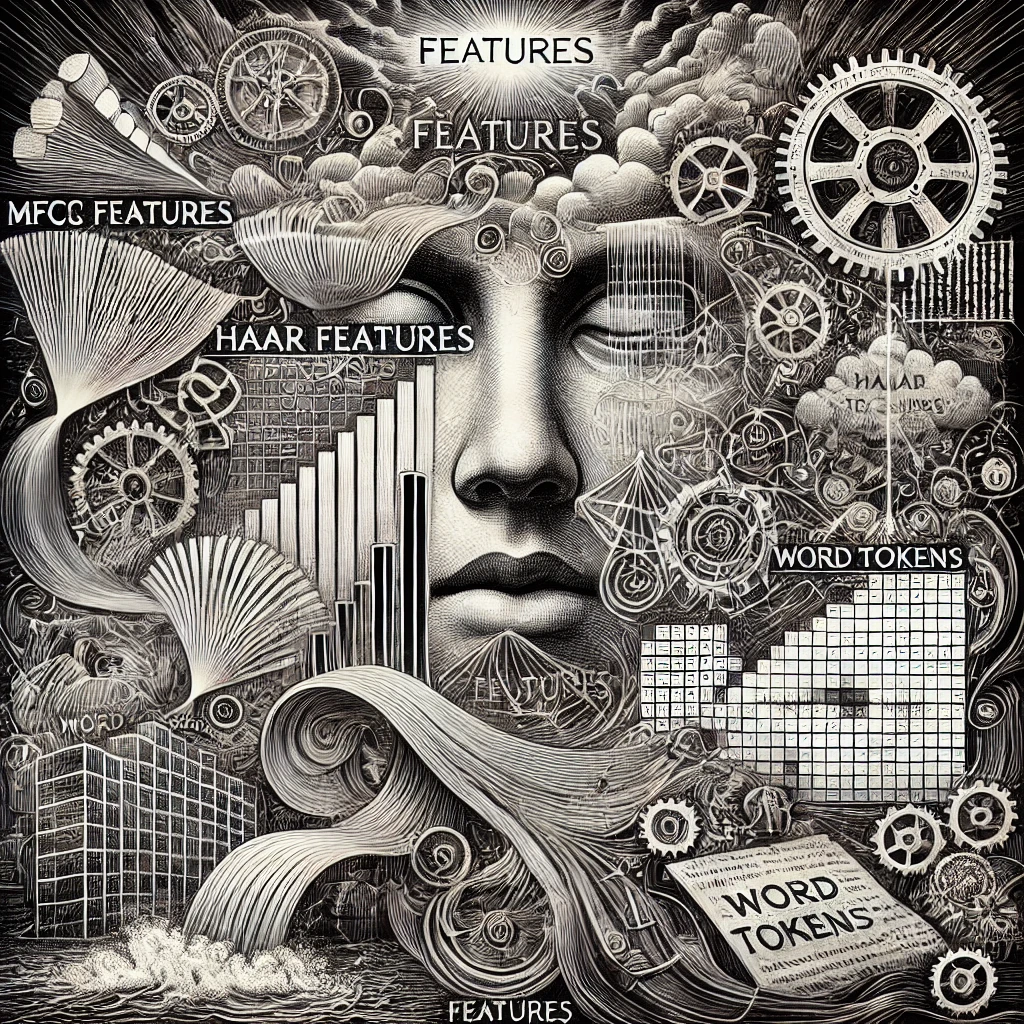

Text processing

Raw Features: occurrence of specific character sequences, word or token counts, sequence length.
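
As a rough sketch, the raw text features listed above can be computed with the standard library alone. The function name `raw_text_features`, the regex tokenizer, and the choice of character trigrams are assumptions for illustration.

```python
import re
from collections import Counter


def raw_text_features(text, n=3):
    """Compute token counts, character n-gram counts, and sequence length."""
    lowered = text.lower()
    # Simple assumed tokenizer: runs of word characters.
    tokens = re.findall(r"\w+", lowered)
    # Occurrences of every character sequence of length n.
    ngrams = Counter(lowered[i:i + n] for i in range(len(lowered) - n + 1))
    return {
        "token_counts": Counter(tokens),
        "char_ngrams": ngrams,
        "sequence_length": len(tokens),
    }


features = raw_text_features("The movie was good, really good.")
```

Here `features["token_counts"]["good"]` is 2 and `features["sequence_length"]` is 6.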

Engineered Features: Word embeddings (e.g., Word2Vec, BERT embeddings).

Context: In sentiment analysis, embeddings provide dense, meaningful representations of text features.
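
To make "dense representation" concrete, here is a minimal sketch that mean-pools word vectors into one sentence vector. The three-dimensional vectors in `TOY_EMBEDDINGS` are invented for the example and do not come from Word2Vec, BERT, or any trained model; real embeddings typically have hundreds of dimensions.

```python
# Toy 3-dimensional "embeddings"; the values are made up for illustration
# and are not the output of any trained model.
TOY_EMBEDDINGS = {
    "good": [0.9, 0.1, 0.0],
    "bad": [-0.8, 0.2, 0.1],
    "movie": [0.0, 0.7, 0.3],
}


def sentence_vector(tokens, table, dim=3):
    """Mean-pool the vectors of known tokens into one dense sentence vector."""
    known = [table[t] for t in tokens if t in table]
    if not known:
        return [0.0] * dim  # no known token: fall back to the zero vector
    return [sum(vec[i] for vec in known) / len(known) for i in range(dim)]


vec = sentence_vector(["good", "movie"], TOY_EMBEDDINGS)
```

A sentiment classifier would take a vector like `vec` as input instead of sparse word counts.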

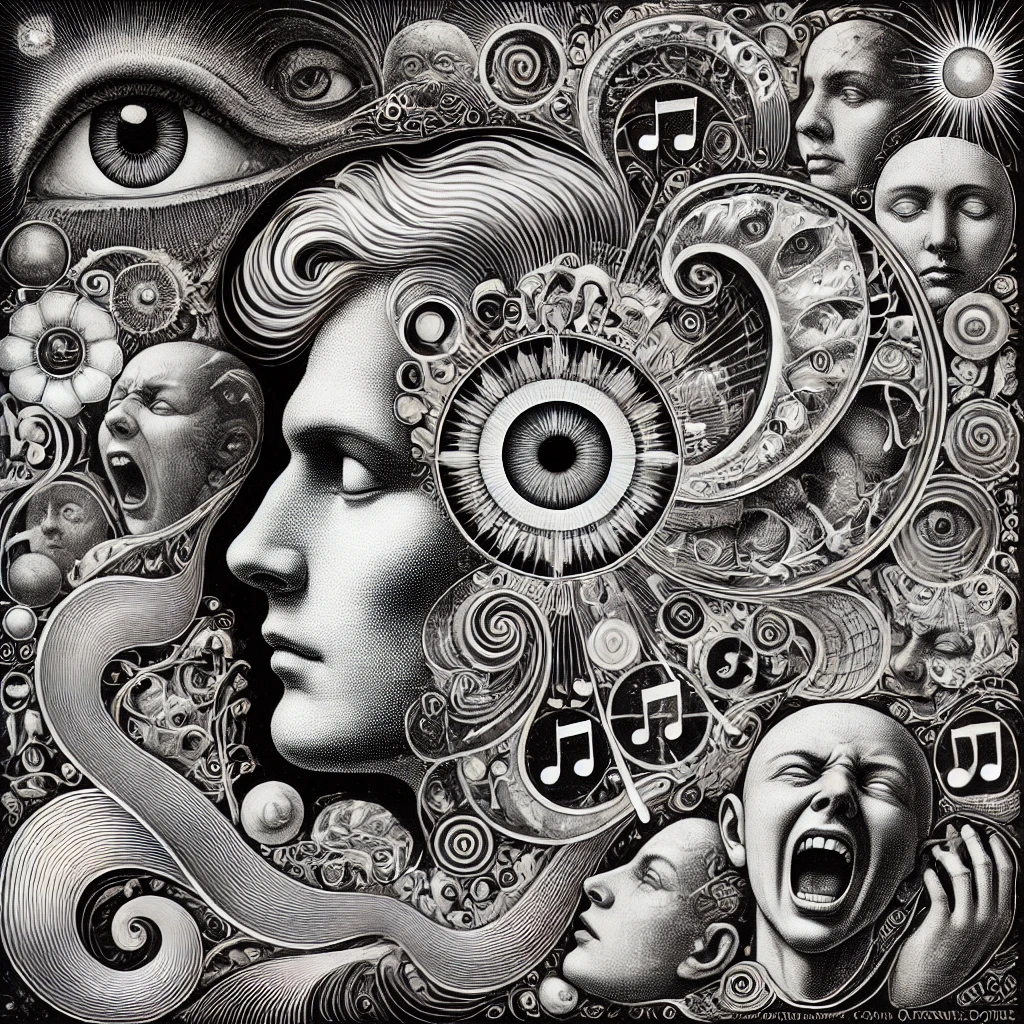

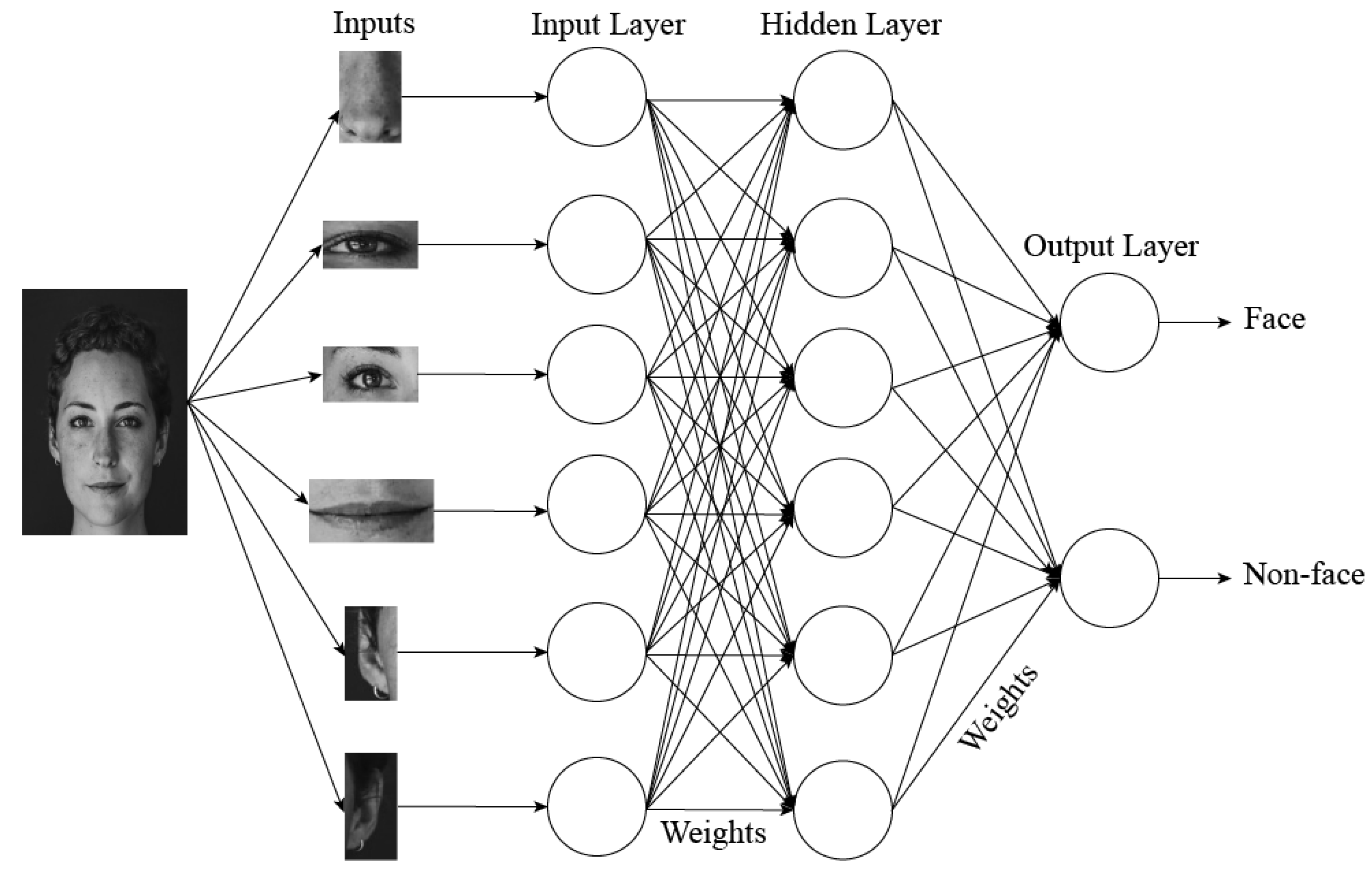

Image processing

Raw Features: Pixel intensity values, RGB color values.

Engineered Features: Haar features, Gabor wavelets, Histogram of oriented gradients (HOG), edge counts, convolutional feature maps.

Context: In object detection, pixel patterns or edge-based features help detect objects in the image.
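
The step from raw pixels to a simple engineered feature might look like the sketch below. It converts RGB values to intensities and counts pixels with a large intensity jump to a neighbor. The 4x4 toy image, the threshold of 50, and the function names are assumptions; this is a crude edge count, not HOG or a Haar feature.

```python
def to_grayscale(rgb_image):
    """Convert rows of (R, G, B) tuples to intensities using BT.601 luma weights."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row]
            for row in rgb_image]


def edge_count(gray, threshold=50.0):
    """Count pixels whose jump to the right or lower neighbor exceeds the threshold."""
    count = 0
    for y in range(len(gray) - 1):
        for x in range(len(gray[0]) - 1):
            dx = abs(gray[y][x + 1] - gray[y][x])
            dy = abs(gray[y + 1][x] - gray[y][x])
            if max(dx, dy) > threshold:
                count += 1
    return count


# Toy 4x4 image: left half black, right half white (illustrative only).
BLACK, WHITE = (0, 0, 0), (255, 255, 255)
image = [[BLACK, BLACK, WHITE, WHITE] for _ in range(4)]

gray = to_grayscale(image)
edges = edge_count(gray)
```

The single vertical boundary in the toy image is picked up once per scanned row.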

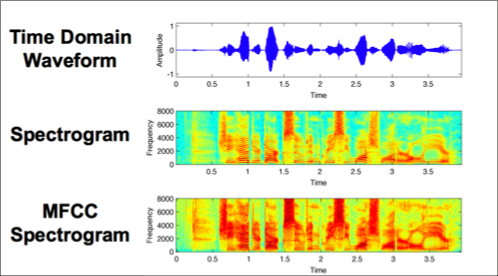

Speech processing

Raw Features: Waveform amplitudes, signal energy.

Engineered Features: Mel-frequency cepstral coefficients (MFCCs), spectrogram data, pitch.

Context: In speech recognition, MFCCs are extracted from short frames of audio to characterize the spectral envelope of the signal.
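
Full MFCC extraction needs a mel filterbank and a cosine transform, which is more than fits here. The sketch below covers only the simpler features: per-frame signal energy and one magnitude spectrum per frame (the columns of a spectrogram), computed with a naive DFT. The sampling rate, frame length, and the synthetic 1000 Hz sine are assumptions standing in for recorded audio.

```python
import cmath
import math

SAMPLE_RATE = 8000  # assumed sampling rate in Hz
FRAME = 64          # assumed frame length in samples

# Synthetic waveform: a 1000 Hz sine standing in for recorded audio.
wave = [math.sin(2 * math.pi * 1000 * n / SAMPLE_RATE) for n in range(4 * FRAME)]


def frame_energy(frame):
    """Signal energy: sum of squared amplitudes in the frame."""
    return sum(s * s for s in frame)


def magnitude_spectrum(frame):
    """Naive DFT magnitudes for bins 0..N/2 (one spectrogram column)."""
    n = len(frame)
    return [
        abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
        for k in range(n // 2 + 1)
    ]


# Split the waveform into non-overlapping frames.
frames = [wave[i:i + FRAME] for i in range(0, len(wave), FRAME)]
energies = [frame_energy(f) for f in frames]
spectrogram = [magnitude_spectrum(f) for f in frames]

# Strongest frequency bin of the first frame, converted to Hz.
peak_bin = max(range(len(spectrogram[0])), key=lambda k: spectrogram[0][k])
peak_hz = peak_bin * SAMPLE_RATE / FRAME
```

For this input the strongest bin corresponds to the 1000 Hz tone. A practical pipeline would use an FFT, overlapping windowed frames, and a library routine for the MFCC stage.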

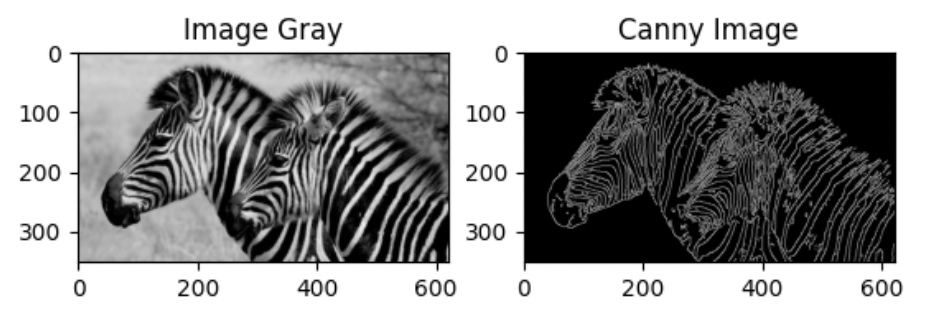

Feature detection

The Canny edge detector is a classic and still effective method for extracting contour features.
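
Two of the stages Canny's method is built from can be sketched in plain Python: Sobel gradient magnitude and hysteresis thresholding. This is deliberately not a full Canny implementation, since Gaussian smoothing and non-maximum suppression are omitted. The toy image and the `low`/`high` thresholds are assumptions chosen for the example.

```python
def sobel_magnitude(gray):
    """Gradient magnitude at interior pixels via 3x3 Sobel kernels; borders stay 0."""
    h, w = len(gray), len(gray[0])
    mag = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (gray[y - 1][x + 1] + 2 * gray[y][x + 1] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y][x - 1] - gray[y + 1][x - 1])
            gy = (gray[y + 1][x - 1] + 2 * gray[y + 1][x] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y - 1][x] - gray[y - 1][x + 1])
            mag[y][x] = (gx * gx + gy * gy) ** 0.5
    return mag


def hysteresis(mag, low, high):
    """Keep strong pixels (>= high) plus weak pixels (>= low) connected to them."""
    h, w = len(mag), len(mag[0])
    edges = [[False] * w for _ in range(h)]
    stack = [(y, x) for y in range(h) for x in range(w) if mag[y][x] >= high]
    for y, x in stack:
        edges[y][x] = True
    # Grow edges from strong pixels into 8-connected weak neighbors.
    while stack:
        y, x = stack.pop()
        for ny in range(max(0, y - 1), min(h, y + 2)):
            for nx in range(max(0, x - 1), min(w, x + 2)):
                if not edges[ny][nx] and mag[ny][nx] >= low:
                    edges[ny][nx] = True
                    stack.append((ny, nx))
    return edges


# Toy 5x6 grayscale image with one vertical boundary (illustrative only).
gray = [[0, 0, 0, 255, 255, 255] for _ in range(5)]
edges = hysteresis(sobel_magnitude(gray), low=100, high=500)
edge_pixels = sum(cell for row in edges for cell in row)
```

Without non-maximum suppression the detected contour is two pixels wide; thinning it to a single pixel is what the omitted stage would do.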

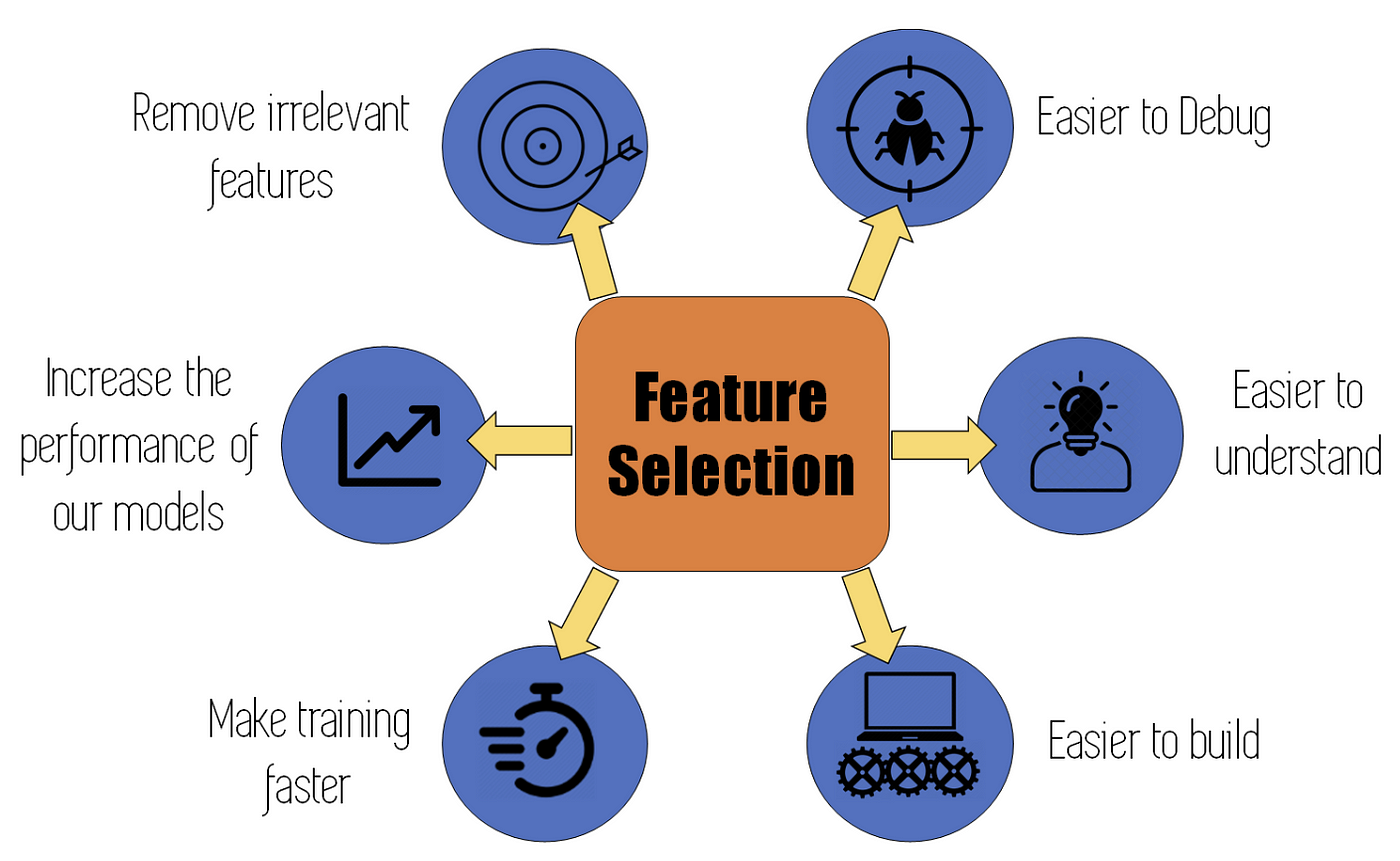

Feature selection

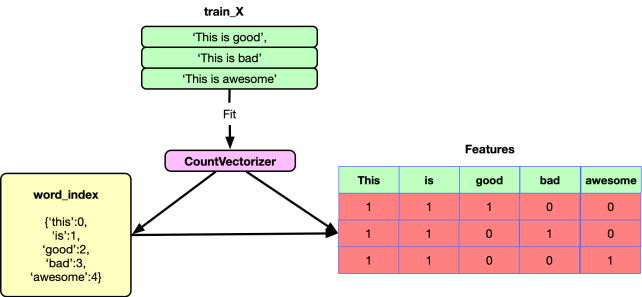

Testing

Supervised learning & Human learning

Categorization

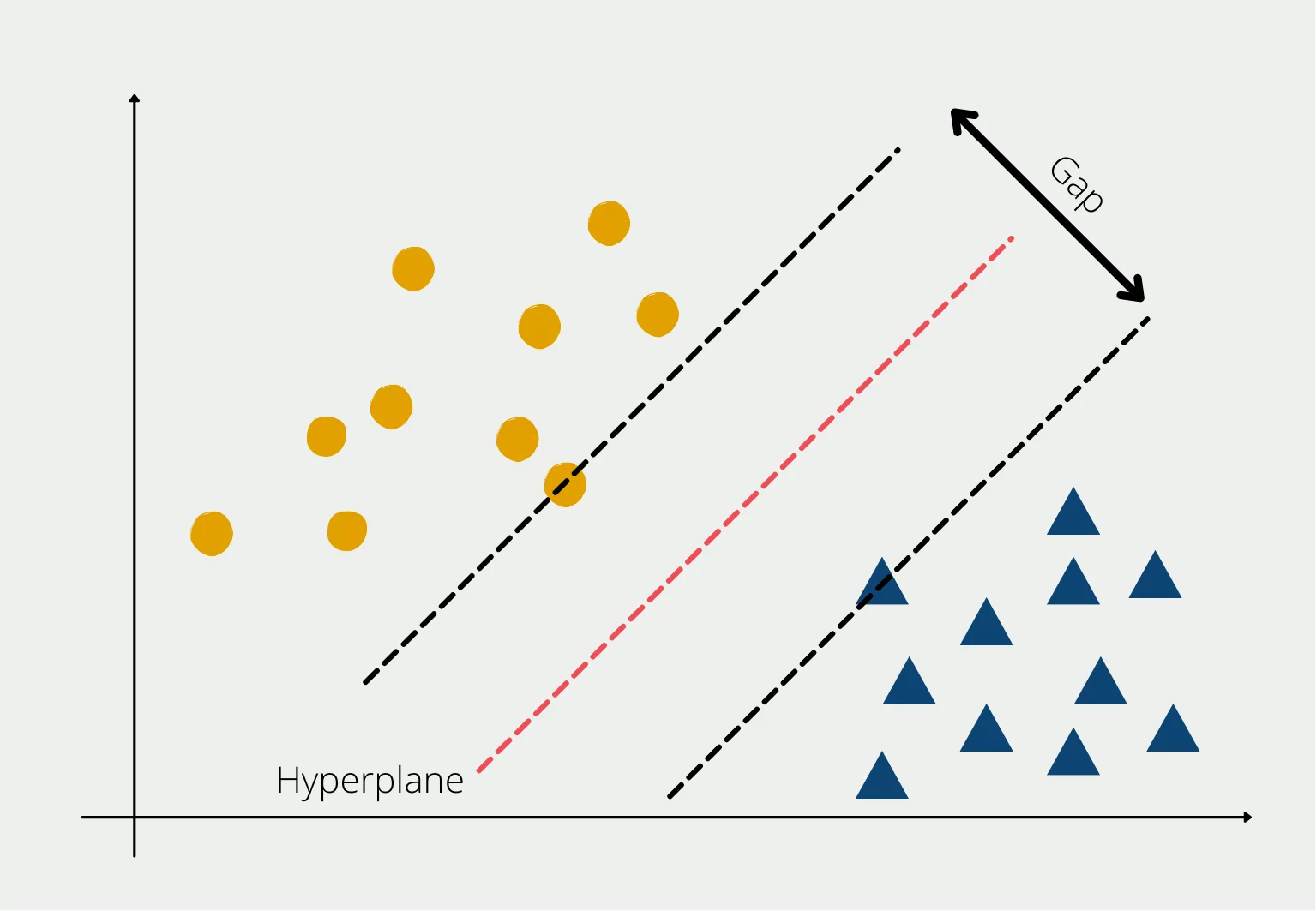

Classifiers

Support Vector Machines

Decision Trees

Exercise: AI Unplugged 1

At the end of the training phase, each team exchanges the criteria it formulated with another team. The students are then shown the pictures of the remaining monkeys (test data) one after the other. For each image, the teams decide whether the monkey will bite or not, using the scheme of rules developed by their classmates...
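
The exercise maps directly onto training and evaluating a classifier, as the sketch below suggests. The monkey attributes (`shows_teeth`, `eyes_wide`), the labels, and the rule in `team_rules` are hypothetical stand-ins; the actual exercise works with picture cards and whatever criteria the students come up with.

```python
# Hypothetical monkey descriptions; the real exercise uses picture cards.
train = [
    ({"shows_teeth": True, "eyes_wide": True}, "bites"),
    ({"shows_teeth": False, "eyes_wide": False}, "does not bite"),
    ({"shows_teeth": True, "eyes_wide": False}, "does not bite"),
    ({"shows_teeth": False, "eyes_wide": True}, "does not bite"),
]
test = [
    ({"shows_teeth": True, "eyes_wide": True}, "bites"),
    ({"shows_teeth": True, "eyes_wide": False}, "does not bite"),
    ({"shows_teeth": False, "eyes_wide": True}, "does not bite"),
    ({"shows_teeth": True, "eyes_wide": False}, "bites"),
]


def team_rules(monkey):
    """A rule scheme a team might derive from the training cards (assumed)."""
    if monkey["shows_teeth"] and monkey["eyes_wide"]:
        return "bites"
    return "does not bite"


def accuracy(rules, labelled):
    """Fraction of labelled examples the rule scheme classifies correctly."""
    correct = sum(1 for features, label in labelled if rules(features) == label)
    return correct / len(labelled)


train_accuracy = accuracy(team_rules, train)
test_accuracy = accuracy(team_rules, test)
```

In this made-up data the rules fit every training card but miss one of the four test cards, which is the gap between training and test performance that the exercise is meant to expose.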

Training

Evaluation